Introduction

When working with the CROSSCON Hypervisor on the RPi4, we found ourselves needing a more stable and reliable setup for building the CROSSCON stack.

In its simplest form, the provided demo relies on a Buildroot initramfs.

We quickly realized that a lot of the things that we want to do with the stack (for example building and testing TA-related applications, or security tests of the Hypervisor) would be a lot easier if we had full rootfs access.

Basing the demo on our existing OS, Zarhus, would also make reproduction, future integration, and adding new tools easier.

This is where the idea for Zarhus integration into the CROSSCON stack was born.

Note: This content is mostly geared toward junior to mid-level embedded systems developers. If you are a senior, there’s a high chance that a good portion of the content will seem trivial to you.

Also, if you already know what CROSSCON and its Hypervisor are, feel free to jump ahead to the section “Why it’s Convenient to Have Zarhus on the Hypervisor”, where I delve deeper into the reasons for this integration, or straight to “How to Build Zarhus for the CROSSCON Hypervisor” if you’re more interested in the technical challenges and how I solved them.

What is CROSSCON?

Modern IoT systems increasingly require robust security while supporting various hardware platforms. Enter CROSSCON, which stands for Cross-platform Open Security Stack for Connected Devices. CROSSCON is a consortium-based project (3mdeb is one of the consortium members) aimed at delivering an open, modular, portable, and vendor-agnostic security stack for IoT.

Its goal: ensure devices within any IoT ecosystem meet essential security standards, preventing attackers from turning smaller or more vulnerable devices into easy entry points.

Here you can find the links to the CROSSCON repositories, which contain the software used to achieve the goals mentioned above:

- this repository contains demos of the CROSSCON stack for various platforms, including QEMU and the RPi4.

- the CROSSCON Hypervisor is probably the most important component; during the default demo for the RPi4, it is compiled with a config that includes an OPTEE-OS VM and a Linux VM.

The CROSSCON project’s website is a good resource for learning about the project’s goals. The use-cases page contains a great overview of the exact features that the stack has, including its security and quality-of-life applications.

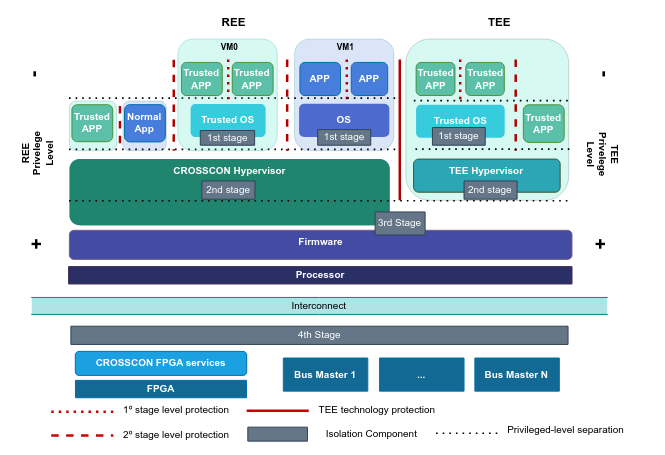

The publications page has interesting papers from members of the consortium about the project and the challenges it will face; I recommend reading this one, which gives a good overview of the whole project. The image above illustrates how the components of the stack fit together.

If you’ve worked with OpenXT before, you might notice some similarities between it and CROSSCON. Both are open source and aim to provide security and isolation.

That being said, they’re trying to achieve this in different environments. OpenXT is primarily geared toward x86 hardware and relies on the Xen hypervisor, whereas CROSSCON builds on the Bao hypervisor, with a strong emphasis on ARM. CROSSCON is also designed with Trusted Execution Environments in mind, something which OpenXT doesn’t go out of its way to support. While there is a conceptual overlap between these two projects, they operate in entirely different ecosystems.

The CROSSCON Hypervisor

The CROSSCON Hypervisor is a key piece of CROSSCON’s open security stack. It builds upon the Bao hypervisor, a lightweight static-partitioning hypervisor offering strong isolation and real-time guarantees. Below are some highlights of its architecture and capabilities:

Static Partitioning and Isolation

The Bao foundation provides static partitioning of resources - CPUs, memory, and I/O - among multiple virtual machines (VMs). This approach ensures that individual VMs do not interfere with one another, improving fault tolerance and security. Each VM has dedicated hardware resources:

- Memory: Statically assigned using two-stage translation.

- Interrupts: Virtual interrupts are mapped one-to-one with physical interrupts.

- CPUs: Each VM can directly control its allocated CPU cores without a conventional scheduler.
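The isolation rules above can be illustrated with a small Python model. This is a sketch under assumptions, not the CROSSCON Hypervisor's configuration format: `VmSpec` and `check_partitioning` are hypothetical names, and memory is simplified to `(base, size)` ranges. It reports any CPU, interrupt, or memory range that two statically partitioned VMs would have in common.

```python
from dataclasses import dataclass, field
from itertools import combinations


@dataclass
class VmSpec:
    """Hypothetical description of one statically partitioned VM."""
    name: str
    cpus: set[int]                                                # physical cores owned by this VM
    regions: list[tuple[int, int]] = field(default_factory=list)  # (base, size) of guest memory
    irqs: set[int] = field(default_factory=set)                   # physical IRQs passed through 1:1


def overlaps(a: tuple[int, int], b: tuple[int, int]) -> bool:
    """True if the half-open ranges [base, base + size) intersect."""
    return a[0] < b[0] + b[1] and b[0] < a[0] + a[1]


def check_partitioning(vms: list[VmSpec]) -> list[str]:
    """Return isolation violations between every pair of VMs; empty means fully disjoint."""
    problems = []
    for a, b in combinations(vms, 2):
        if a.cpus & b.cpus:
            problems.append(f"{a.name}/{b.name} share CPUs {sorted(a.cpus & b.cpus)}")
        if a.irqs & b.irqs:
            problems.append(f"{a.name}/{b.name} share IRQs {sorted(a.irqs & b.irqs)}")
        for ra in a.regions:
            for rb in b.regions:
                if overlaps(ra, rb):
                    problems.append(f"{a.name}/{b.name} memory overlaps at {hex(ra[0])}/{hex(rb[0])}")
    return problems
```

Two VMs with disjoint CPU sets, interrupts, and memory ranges yield an empty list; giving both of them CPU 1 adds an entry such as `a/b share CPUs [1]`.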

Dynamic VM Creation

To broaden applicability in IoT scenarios, CROSSCON Hypervisor introduces a dynamic VM creation feature. Instead of being fixed at boot, new VMs can be instantiated during runtime using the VM-stack mechanism and a hypervisor call interface. A host OS driver interacts with the Hypervisor to pass it a configuration file, prompting CROSSCON Hypervisor to spawn the child VM. During this process, resources - aside from the CPUs - are reclaimed from the parent VM and reassigned to the newly created VM, ensuring isolation between VMs.
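To illustrate the resource hand-over, the following Python sketch models only the ownership bookkeeping described above: memory (tracked as 4 KiB page numbers, a simplifying assumption) and devices move from the parent to the child, while CPUs are shared. It is not the CROSSCON hypercall interface or its host driver; `Vm`, `pages_of`, and `spawn_child` are hypothetical names.

```python
from dataclasses import dataclass, field

PAGE = 0x1000  # assumed 4 KiB granularity for this illustration


@dataclass
class Vm:
    name: str
    cpus: frozenset[int]
    pages: set[int] = field(default_factory=set)      # page frame numbers owned by this VM
    devices: set[str] = field(default_factory=set)    # devices passed through to this VM
    children: list["Vm"] = field(default_factory=list)


def pages_of(base: int, size: int) -> set[int]:
    """Page frame numbers covered by [base, base + size), assuming page-aligned input."""
    return set(range(base // PAGE, (base + size) // PAGE))


def spawn_child(parent: Vm, name: str, base: int, size: int, devices: set[str]) -> Vm:
    """Move memory and devices from `parent` into a new child VM.

    CPUs are not reclaimed: the child runs on the parent's cores.
    """
    wanted = pages_of(base, size)
    if not wanted <= parent.pages:
        raise ValueError("child memory must come from memory the parent still owns")
    if not devices <= parent.devices:
        raise ValueError("child devices must be owned by the parent")
    parent.pages -= wanted        # the parent loses access to the reclaimed memory
    parent.devices -= devices
    child = Vm(name, parent.cpus, wanted, set(devices))
    parent.children.append(child)
    return child
```

For example, carving a 16 KiB region and one device out of a host VM leaves the child with the host's CPU set, but with memory and devices the host can no longer touch; asking for memory the host no longer owns raises `ValueError`.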

Per-VM Trusted Execution Environment (TEE)

CROSSCON Hypervisor also supports per-VM TEE services by pairing each guest OS with its own trusted environment. This approach leverages OP-TEE (both on Arm and RISC-V architectures) so that even within the “normal” world, multiple trusted OS VMs can run safely in isolation.

Why it’s Convenient to Have Zarhus on the Hypervisor

During our time working with the default demo for the RPi4, our team ran into a couple of problems, mainly relating to the bare-bones nature of the provided Buildroot initramfs environment. While handy for a proof of concept, that environment lacks many essential development tools, such as compilers, linkers, and other utilities that embedded engineers often need. Any time I wanted to:

- Execute tests

- Gather logs

- Change the configuration of the build

it required cross-compiling the tools and assets that I needed, which is cumbersome. The lack of a rootfs can also make working with trusted apps difficult, especially when it comes to making sure that they interact properly with the OPTEE-OS (in the second VM).

This was especially true when working on TAs: their development required many different libraries, all of which needed either cross-compilation or a manual implementation of the provided standard. This was very cumbersome and slowed down progress considerably.

We also have limited operational familiarity with Buildroot, while our team has a lot of day-to-day experience customizing and extending Yocto-based systems. Although it would be possible to expand the existing Buildroot setup, we expected to spend a significant amount of time integrating each needed tool or library. In contrast, working with Yocto (Zarhus in particular) would let us leverage existing recipes and a development model we already know inside out, allowing us to focus on improving and testing the CROSSCON stack rather than wrestling with the build environment.

It was then that I realized I could combine the existing process for booting the CROSSCON Hypervisor on the RPi4 with our Yocto-based OS, Zarhus. This would eliminate our previous problems and speed up testing and working with the Hypervisor, thanks to the immediate availability of compilers, linkers, and other tools, as well as having a rootfs at our disposal.

Bringing Zarhus to the CROSSCON Hypervisor significantly boosts development and testing convenience, especially on the Raspberry Pi 4:

- Full Toolchain Availability: With Zarhus, I would gain out-of-the-box compilers, linkers, and more. This would be a major improvement over the limited Buildroot initramfs environment.

- Faster Iteration: I could build and test software entirely within the guest environment, without needing to rely on external cross-compilation or complicated host setups.

- Complete Rootfs Mounting: With Zarhus mounting a full filesystem, I could easily install additional tools through Yocto, manage logs, and run services in ways that would be impossible or extremely cumbersome in a minimal initramfs environment.

With Zarhus, we could have a recipe for the TAs that has access to all of the needed libraries, and the dependencies of each app could easily be added to the environment.

How to Build Zarhus for the CROSSCON Hypervisor

The initial idea was simple: the Hypervisor is built from a config file that specifies things like:

- How many VMs there are

- What their interrupts are

- Each VM’s access to memory

- Shared memory addresses

- etc…

In that file, I could see that each VM is built from an image. The Linux VM (the one that interests me) is specified here:

|

|

That path points to an image built with lloader, and that image contains the Linux kernel and the device tree file. This is done during step 9 of the demo.

I realized that I could swap that Linux kernel for one automatically generated in our Yocto build environment. This initially didn’t work: by default, Yocto builds a zImage (a compressed, self-extracting version of the kernel), whereas I needed an Image kernel (the generic, uncompressed binary image). This was a quick fix in the Yocto build environment, with this line added:

|

|
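Since a zImage and an Image are easy to mix up when copying files out of the Yocto deploy directory, a quick sanity check can save a rebuild. The Python sketch below classifies a file by the header magic numbers defined in the Linux arm and arm64 boot protocols, and also recognizes gzip data and device tree blobs. It does not reproduce the Yocto line above; `identify_blob`, `identify_file`, and any path you pass them are illustrative.

```python
import struct

ARM64_IMAGE_MAGIC = 0x644D5241  # "ARM\x64", little-endian u32 at offset 0x38 of an arm64 Image
ARM_ZIMAGE_MAGIC = 0x016F2818   # little-endian u32 at offset 0x24 of a 32-bit ARM zImage
FDT_MAGIC = 0xD00DFEED          # big-endian u32 at offset 0 of a flattened device tree


def identify_blob(data: bytes) -> str:
    """Classify a kernel or DTB file from its first 64 bytes."""
    if len(data) >= 0x3C and struct.unpack_from("<I", data, 0x38)[0] == ARM64_IMAGE_MAGIC:
        return "arm64 Image"
    if len(data) >= 0x28 and struct.unpack_from("<I", data, 0x24)[0] == ARM_ZIMAGE_MAGIC:
        return "arm zImage"
    if data[:2] == b"\x1f\x8b":
        return "gzip-compressed (e.g. Image.gz)"
    if len(data) >= 4 and struct.unpack_from(">I", data, 0)[0] == FDT_MAGIC:
        return "device tree blob"
    return "unknown"


def identify_file(path: str) -> str:
    """Read just enough of the file to check the headers above."""
    with open(path, "rb") as f:
        return identify_blob(f.read(64))
```

Running `identify_file` on the kernel you plan to feed into lloader should report `arm64 Image`; `arm zImage` points to the wrong image type, or to a 32-bit build.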

So I have my kernel already, but what about the rest? I figured out that, thanks to this commit, the whole SD card is already exposed: I just have to put my rootfs there and tell the kernel how to mount it.

The demo relies on a manually partitioned SD card that contains a single partition with everything needed to run the demo.

Since Yocto already provides us with .wic.bmap and .wic.gz files, I decided to use them. Flashing the SD card with those files gives a card with two partitions, a boot partition and a root partition, so after flashing, all I have to do is remove everything from the boot partition and replace it with the firmware and the Hypervisor file (which already contains our kernel).
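Before wiping the boot partition, it can help to confirm that the flashed card (or the `.wic.gz` image itself) really has the two expected partitions. This Python sketch reads the classic MBR partition table; it assumes the image uses an msdos-style table rather than GPT and 512-byte sectors, and `mbr_partitions` and `partitions_of` are hypothetical helper names.

```python
import gzip
import struct

SECTOR = 512                 # MBR entries are addressed in 512-byte sectors
MBR_TABLE = 446              # offset of the first of four 16-byte partition entries
MBR_SIGNATURE = b"\x55\xaa"  # boot signature at offsets 510-511


def mbr_partitions(sector0: bytes) -> list:
    """Parse the four primary entries of an MBR partition table, skipping empty slots."""
    if len(sector0) < SECTOR or sector0[510:512] != MBR_SIGNATURE:
        raise ValueError("no MBR boot signature found")
    parts = []
    for i in range(4):
        entry = sector0[MBR_TABLE + 16 * i: MBR_TABLE + 16 * (i + 1)]
        ptype = entry[4]                                       # partition type byte
        start_lba, num_sectors = struct.unpack_from("<II", entry, 8)
        if ptype == 0 or num_sectors == 0:
            continue                                           # unused slot
        parts.append({
            "index": i + 1,
            "type": hex(ptype),
            "start": start_lba * SECTOR,                       # byte offset on the card
            "size": num_sectors * SECTOR,                      # size in bytes
        })
    return parts


def partitions_of(path: str) -> list:
    """Read sector 0 of a raw image, a gzip-compressed .wic.gz, or a block device."""
    opener = gzip.open if path.endswith(".gz") else open
    with opener(path, "rb") as f:
        return mbr_partitions(f.read(SECTOR))
```

Calling `partitions_of` on the `.wic.gz` file, or on your card reader's block device (which usually requires root), should return two entries, typically a FAT boot partition and a Linux (type `0x83`) root partition.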

At this point, I thought I had done everything and that the configuration should work, but I quickly ran into many problems that I had to fix, including:

Picking the wrong Yocto build target

When building Zarhus for this setup, it’s important to note that there are two very similar targets for the RPi4:

- raspberrypi4: builds a 32-bit version of the system

- raspberrypi4-64: builds a 64-bit version of the system
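A cheap guard against picking the wrong target is to check the configured `MACHINE` before starting a long build. The Python sketch below resolves `MACHINE` from simple `=`, `?=` and `??=` assignments in a `local.conf`-style text, following BitBake's precedence for those three operators. It assumes the value is set there; setups that pass `MACHINE` through the environment or another configuration layer need a different check, and the function names are hypothetical.

```python
import re
from typing import Optional

# Matches lines such as MACHINE = "x", MACHINE ?= "x" or MACHINE ??= "x" (comments are skipped
# because the line must start with MACHINE; other operators like += or := are ignored).
MACHINE_RE = re.compile(r'^\s*MACHINE\s*(\?\?=|\?=|=)\s*"([^"]*)"', re.MULTILINE)


def configured_machine(local_conf: str) -> Optional[str]:
    """Last '=' wins; otherwise the first '?=' wins; otherwise the last '??=' wins."""
    found = MACHINE_RE.findall(local_conf)
    hard = [value for op, value in found if op == "="]
    soft = [value for op, value in found if op == "?="]
    weak = [value for op, value in found if op == "??="]
    if hard:
        return hard[-1]
    if soft:
        return soft[0]
    return weak[-1] if weak else None


def check_machine(local_conf: str, expected: str = "raspberrypi4-64") -> None:
    """Abort with a readable message unless the 64-bit RPi4 target is selected."""
    machine = configured_machine(local_conf)
    if machine != expected:
        raise SystemExit(f"MACHINE is {machine!r}, expected {expected!r} (64-bit build)")
```

Passing the contents of your `conf/local.conf` to `check_machine` exits with an error message unless the machine is `raspberrypi4-64`.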

When initially trying to get all of this to work, I made an oversight that cost me a couple of hours of painstaking debugging: I mistakenly chose the target that builds a 32-bit version of the system, whereas the CROSSCON Hypervisor setup is designed to work with 64-bit systems.

Trying to get a 32-bit version to work here results in an abort error from the Hypervisor after it has finished assigning interrupts:

|

|

This error wasn’t very informative, and it gave us a hard time finding the solution, which came seemingly out of nowhere. I was trying to recompile the kernel manually within the Yocto build environment using devtool when I noticed that the appropriate toolchains weren’t there.

That gave me a clue that this could be a 32-bit system instead of a 64-bit one, and a quick search online confirmed it.
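Another way to confirm which variant was built is to look at the ELF header of a binary from the generated rootfs: byte 4 of the header encodes 32-bit vs 64-bit, and the `e_machine` field at offset 18 distinguishes Arm (40) from AArch64 (183). A minimal Python sketch, with hypothetical function names:

```python
import struct

EM_ARM = 40       # e_machine value for 32-bit Arm
EM_AARCH64 = 183  # e_machine value for AArch64


def elf_arch(header: bytes) -> str:
    """Describe an ELF file from its first 20 bytes: word size and machine."""
    if header[:4] != b"\x7fELF":
        raise ValueError("not an ELF file")
    bits = {1: 32, 2: 64}.get(header[4])                          # e_ident[EI_CLASS]
    byteorder = "<" if header[5] == 1 else ">"                    # e_ident[EI_DATA]: 1 = little-endian
    (machine,) = struct.unpack_from(byteorder + "H", header, 18)  # e_machine
    name = {EM_ARM: "arm", EM_AARCH64: "aarch64"}.get(machine, f"machine {machine}")
    return f"{bits}-bit {name}"


def elf_arch_of(path: str) -> str:
    """Inspect a regular executable file (not a symlink) from the extracted rootfs."""
    with open(path, "rb") as f:
        return elf_arch(f.read(20))
```

For a `raspberrypi4-64` build, any regular executable in the rootfs should report `64-bit aarch64`; `32-bit arm` means the 32-bit target was built.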

Problems with serial output

After switching to the 64-bit target, I finally managed to get some logs from the booting kernel:

|

|

but that was the end of the output - it seemed to freeze. Once I noticed where it was freezing:

|

|

I knew there was some sort of serial console issue. I suspected that the system was booting normally and without errors, and just not printing the output because of an unconfigured console.

Adding console=ttyS1,115200 to bootargs in the device tree file used in step 9 fixed the issue, but another one arose:

|

|

This was an easy fix too, again by adding to bootargs, but this time 8250.nr_uarts=8. This parameter tells the 8250 serial driver to allocate up to 8 ports. The exact number doesn’t matter that much here, but by default it is one, and that’s not enough for the serial setup that I have.
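Both fixes come down to editing the `bootargs` string of the device tree used in step 9. If you script that step, a small helper that sets or replaces individual parameters keeps the string tidy. This Python sketch is an illustration under assumptions: it splits on whitespace (no quoted values), collapses repeated keys into one (so it does not suit setups that deliberately pass several `console=` entries), and `merge_bootargs` is a hypothetical name.

```python
from typing import Dict, Optional


def _fmt(key: str, value: Optional[str]) -> str:
    """Render one parameter; None means a bare flag such as `rw` or `rootwait`."""
    return key if value is None else f"{key}={value}"


def merge_bootargs(bootargs: str, updates: Dict[str, Optional[str]]) -> str:
    """Set or replace kernel parameters in a bootargs string.

    Existing keys are rewritten in place (later duplicates are dropped);
    keys not present yet are appended in the order given.
    """
    out = []
    done = set()
    for token in bootargs.split():
        key = token.split("=", 1)[0]
        if key not in updates:
            out.append(token)                    # unrelated parameter: keep as-is
        elif key not in done:
            out.append(_fmt(key, updates[key]))  # first occurrence: rewrite in place
            done.add(key)
        # any further occurrence of an updated key is dropped
    for key, value in updates.items():
        if key not in done:
            out.append(_fmt(key, value))
    return " ".join(out)
```

For instance, with a hypothetical existing value of `earlycon`, `merge_bootargs("earlycon", {"console": "ttyS1,115200", "8250.nr_uarts": "8"})` returns `earlycon console=ttyS1,115200 8250.nr_uarts=8`, which then goes back into the `bootargs` property before the device tree is rebuilt.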

Mounting the rootfs

It was only after fixing the serial console issues that I could uncover the real problem: the kernel was panicking after all, and I just couldn’t see it because of the missing serial output:

|

|

This was an oversight on my part - the rootfs was definitely there on the second partition, but I hadn’t properly instructed the kernel where to find it. As a result, the kernel failed to locate the rootfs and triggered a panic.

This was fixed in the device tree file by specifying the correct partition. All it took was adding root=/dev/mmcblk1p2 rw rootwait to bootargs: this way, the kernel knows where the rootfs is, mounts it read-write, and waits for that device to appear before trying to mount it.
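Once the system boots, the command line the kernel actually received is available in `/proc/cmdline`, which is a handy way to confirm that the rebuilt device tree made it onto the card. The following Python sketch, with hypothetical function names and `/dev/mmcblk1p2` as the expected root device from this setup, flags a missing or wrong `root=` and a missing `rootwait`.

```python
from typing import Dict, List, Optional


def parse_cmdline(cmdline: str) -> Dict[str, Optional[str]]:
    """Split a kernel command line into {key: value}; bare flags map to None."""
    params: Dict[str, Optional[str]] = {}
    for token in cmdline.split():
        key, sep, value = token.partition("=")
        params[key] = value if sep else None  # a later occurrence overwrites an earlier one
    return params


def check_root_args(cmdline: str, expected_root: str = "/dev/mmcblk1p2") -> List[str]:
    """Return human-readable problems with the root-related parameters (empty if fine)."""
    params = parse_cmdline(cmdline)
    problems = []
    if params.get("root") != expected_root:
        problems.append(f"root={params.get('root')!r}, expected {expected_root!r}")
    if "rootwait" not in params:
        problems.append("rootwait is missing, so the kernel may not wait for the SD card to appear")
    return problems


def check_running_kernel(path: str = "/proc/cmdline") -> List[str]:
    """Run the check against the command line of the currently booted kernel."""
    with open(path) as f:
        return check_root_args(f.read())
```

On the booted Linux VM, `check_running_kernel()` should return an empty list.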

I thought that was the end of it for the rootfs mounting, but I quickly realized that it was never going to be this easy: it turns out that the partition locations must also be specified correctly on the rootfs itself, in /etc/fstab.

/etc/fstab is the Linux filesystem table: a configuration table used by utilities such as mount and findmnt, and also processed by systemd-fstab-generator for automatic mounting during boot. /etc/fstab lists the disk partitions and other filesystems to be mounted, and indicates how each of them is supposed to be integrated into the filesystem tree.
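To make the column layout concrete, here is a minimal Python parser for the six whitespace-separated fstab fields (`fs_spec`, `fs_file`, `fs_vfstype`, `fs_mntops`, `fs_freq`, `fs_passno`). It is a sketch: it skips comments and blank lines, defaults the last two fields to 0 as fstab(5) describes, requires the first four fields, and does not decode octal escapes such as `\040` for spaces. `FstabEntry` and `parse_fstab` are hypothetical names.

```python
from typing import List, NamedTuple


class FstabEntry(NamedTuple):
    spec: str     # device or identifier to mount, e.g. /dev/mmcblk1p1 or UUID=...
    file: str     # mount point
    vfstype: str  # filesystem type, e.g. vfat or ext4
    mntops: str   # comma-separated mount options
    freq: int     # dump frequency (fifth field, defaults to 0)
    passno: int   # fsck pass order (sixth field, defaults to 0)


def parse_fstab(text: str) -> List[FstabEntry]:
    """Parse fstab text into entries, ignoring comments and blank lines."""
    entries = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.split()
        if len(fields) < 4:
            raise ValueError(f"malformed fstab line: {line!r}")
        freq = int(fields[4]) if len(fields) > 4 else 0
        passno = int(fields[5]) if len(fields) > 5 else 0
        entries.append(FstabEntry(*fields[:4], freq, passno))
    return entries
```

Listing `entry.spec` for each parsed entry shows at a glance which device names the rootfs expects, which is exactly what has to agree with the partitions the kernel sees.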

Our Yocto environment generates this fstab file automatically. When using Zarhus “normally” (i.e. without the CROSSCON Hypervisor), the partitions specified in it line up with what the kernel expects.

But integrating the CROSSCON Hypervisor changes a lot of files on the first partition, and I suspect that those changes (specifically, combining our kernel with the device tree file using lloader) cause a mismatch between what the kernel expects and what’s actually in /etc/fstab.

All that needs to be done is to change /dev/mmcblk0p1 to /dev/mmcblk1p1 in the last line:

|

|

and the root filesystem gets mounted properly.
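If you prefer to apply this change from a script (for example, with the card's root partition mounted on your host), the following Python sketch rewrites only the first field of matching lines and leaves the rest of the file untouched. The function names and the mount point in the usage note are hypothetical; the device names are the ones from this section.

```python
def replace_fstab_device(text: str, old: str, new: str) -> str:
    """Swap the device (first field) of fstab lines whose device is exactly `old`."""
    out = []
    for line in text.splitlines(keepends=True):
        fields = line.split()
        if fields and fields[0] == old:
            # Replace only the leading field so the spacing of the other columns is kept.
            indent = line[: len(line) - len(line.lstrip())]
            line = indent + new + line.lstrip()[len(old):]
        out.append(line)
    return "".join(out)


def patch_fstab_file(path: str, old: str = "/dev/mmcblk0p1", new: str = "/dev/mmcblk1p1") -> None:
    """Apply the device swap to an fstab file in place."""
    with open(path) as f:
        text = f.read()
    with open(path, "w") as f:
        f.write(replace_fstab_device(text, old, new))
```

For example, `patch_fstab_file("/mnt/rootfs/etc/fstab")` assumes the root partition is mounted at `/mnt/rootfs`. Running it twice is harmless, because after the first run no line starts with `/dev/mmcblk0p1` anymore, and a device such as `/dev/mmcblk0p10` is left alone since fields are compared exactly.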

Issues logging in

I really thought I was done with weird fixes by then, but there was one final issue: the kernel was booting without any errors, but the system would suddenly freeze at some point. Initially, I expected it to be a login issue, so I was looking at the login service and other related components.

This turned out not to be the cause after all: I learned that repeatedly printing a message in a specific abort handler within the Hypervisor works around both the freezing and the inability to log in.

This is a Hypervisor-related issue, and this is just a temporary workaround, but it allows us to use the setup with a rootfs and Zarhus. Any future follow-ups regarding this will be in the corresponding GitHub issue.

Summary

The result of all this debugging is a ready-to-follow guide on how a Zarhus setup with rootfs on the CROSSCON Hypervisor can be achieved.

It takes the user step by step through the changes needed to get this setup to work.

There are still things to be added: right now I am working on recipes inside Yocto that will provide us with utilities such as xtest, a tee-supplicant service, and custom drivers that will let us interact with the OPTEE-OS VM properly. This will be the next big step in integrating Zarhus and the CROSSCON Hypervisor together.

Conclusion

The successful port of Zarhus to the CROSSCON Hypervisor on the RPi4 will make life a lot easier when working with TAs on the Hypervisor, executing security tests, or running any custom program. I hope that this will be a big leap forward in flexibility and productivity: a big part of the CROSSCON project is testing the whole stack, and it will be useful to be able to do that straight from the Linux VM itself, including compilation and tweaking.

Also, since this is now a Yocto-based setup, adding any new packages or tools should be a breeze.

If you’ve been using the CROSSCON Hypervisor demo on the RPi and trying to test something on the Linux VM, there’s a high chance you found the minimal initramfs environment limiting. I suggest giving our setup a try - I am excited to see how developers might use this, and 3mdeb remains committed to expanding this solution.

For any questions or feedback, feel free to contact us at contact@3mdeb.com or hop on our community channels to join the discussion.