Introduction

The I/O memory management unit (IOMMU) is a type of memory management unit (MMU) that connects a Direct Memory Access (DMA) capable expansion bus to the main memory. It extends the system architecture by adding support for the virtualization of memory addresses used by peripheral devices. Additionally, it provides memory isolation and protection by enabling system software to control which areas of physical memory an I/O device may access. It also helps filter and remap interrupts from peripheral devices. Let’s have a look at IOMMU advantages, disadvantages, and how it is implemented from the perspective of hardware and software.

Advantages of IOMMU usage:

- Scatter/gather: a single contiguous virtual memory region can be mapped to multiple non-contiguous physical memory regions, so the IOMMU can make non-contiguous memory appear contiguous to a device. This improves streaming DMA performance for the I/O device.

- Memory isolation and protection: a device can only access memory regions that are mapped for it. Hence, faulty or malicious devices can't corrupt memory outside those regions.

- Memory isolation allows safe device assignment to a virtual machine without compromising host and other guest OSes.

- The IOMMU allows devices that are only capable of 32-bit DMA to access memory above 4 GB.

- Hardware interrupt remapping: interrupt requests from devices are translated through tables set up by system software before they are delivered to the CPU. Primary uses are interrupt isolation and translation between interrupt domains (e.g. IOAPIC vs. x2APIC on x86).
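The scatter/gather, isolation, and above-4 GB points can be illustrated with a toy model. This is a minimal Python sketch under stated assumptions, not how any real IOMMU stores its tables: the class name `ToyIOPageTable`, the 4 KiB page size, and the single-level dictionary are all hypothetical choices made for illustration (real IOMMUs walk multi-level page tables in main memory).

```python
PAGE_SIZE = 4096  # assumption: 4 KiB I/O pages


class IOMMUFault(Exception):
    """Raised when a device accesses an I/O virtual address it may not use."""


class ToyIOPageTable:
    """Hypothetical single-level I/O page table for one device.

    Real IOMMUs walk multi-level page tables stored in main memory;
    a dict is used here only to keep the idea visible.
    """

    def __init__(self):
        # I/O virtual page number -> (physical page number, writable?)
        self.entries = {}

    def map_range(self, iova, phys_pages, writable=True):
        """Map consecutive I/O virtual pages starting at `iova` onto
        arbitrary (possibly scattered) physical pages."""
        first = iova // PAGE_SIZE
        for i, ppn in enumerate(phys_pages):
            self.entries[first + i] = (ppn, writable)

    def translate(self, iova, write=False):
        """Translate an I/O virtual address, enforcing the mapping."""
        entry = self.entries.get(iova // PAGE_SIZE)
        if entry is None:
            raise IOMMUFault(f"unmapped IOVA {iova:#x}")
        ppn, writable = entry
        if write and not writable:
            raise IOMMUFault(f"write to read-only IOVA {iova:#x}")
        return ppn * PAGE_SIZE + iova % PAGE_SIZE


# Scatter/gather: the device sees one contiguous buffer at IOVA 0x1000-0x3fff,
# backed by three scattered physical pages, the last one at 5 GiB.
table = ToyIOPageTable()
table.map_range(0x1000, [0x12345, 0x00042, 0x140000])
# A read-only mapping for a buffer the device must not modify.
table.map_range(0x8000, [0x00077], writable=False)

print(hex(table.translate(0x1010)))  # 0x12345010
print(hex(table.translate(0x3008)))  # 0x140000008: above 4 GiB via a 32-bit IOVA
```

Any address the device was not given (for example IOVA 0x5000 here) raises `IOMMUFault`, which is the toy equivalent of the IOMMU blocking a stray or malicious DMA access.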

Disadvantages of IOMMU usage:

- Added latency: setting up dynamic DMA mappings and translating every device access incur an overhead penalty.

- Host software has to maintain in-memory data structures for use by the IOMMU.
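Both drawbacks stem from the translation tables living in main memory. IOMMUs reduce the cost by caching recent translations in an I/O TLB (IOTLB), and host software must invalidate cached entries when it changes a mapping. The Python sketch below models that idea; the `IOTLB` class, its LRU replacement policy, and the capacity of four entries are assumptions for illustration, not the behavior of any particular IOMMU.

```python
from collections import OrderedDict


class IOTLB:
    """Hypothetical LRU cache of I/O page -> physical page translations."""

    def __init__(self, page_table, capacity=4):
        self.page_table = page_table  # in-memory table maintained by host software
        self.capacity = capacity
        self.cache = OrderedDict()
        self.walks = 0  # slow lookups that had to read the in-memory table

    def lookup(self, iova_page):
        if iova_page in self.cache:
            self.cache.move_to_end(iova_page)  # hit: mark as most recently used
            return self.cache[iova_page]
        self.walks += 1  # miss: fetch the translation from main memory
        ppn = self.page_table[iova_page]
        self.cache[iova_page] = ppn
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict the least recently used entry
        return ppn

    def invalidate(self, iova_page):
        """Host software must drop stale entries after changing a mapping."""
        self.cache.pop(iova_page, None)


page_table = {0: 0x100, 1: 0x2A7, 2: 0x051}
tlb = IOTLB(page_table)

# A device streams over the same three pages ten times.
for _ in range(10):
    for page in (0, 1, 2):
        tlb.lookup(page)
walks_after_streaming = tlb.walks
print(walks_after_streaming)  # 3: only the first pass misses

# The host remaps page 1, so it has to invalidate the cached translation.
page_table[1] = 0x300
tlb.invalidate(1)
new_ppn = tlb.lookup(1)
print(hex(new_ppn), tlb.walks)  # 0x300 4
```

Thirty accesses cost only three table walks here, which is the effect the caching relies on; the `invalidate` call shows the extra bookkeeping that falls on host software.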

In hardware

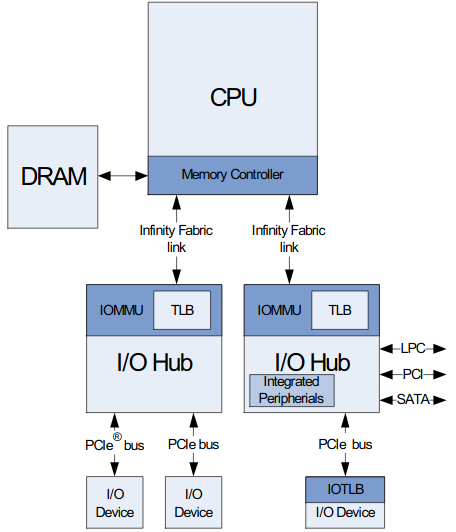

The IOMMU architecture is designed to accommodate a variety of system topologies. A system can contain multiple IOMMUs located at different places in the system fabric: at bridges within a single bus, or at bridges between buses of the same or different types. In modern systems, the IOMMU is commonly integrated with the PCIe root complex. On its downstream side it connects to the PCIe bus, and on its upstream side to the system bus (e.g. AMD Infinity Fabric). One example topology is shown in the figure.

In software

On AMD platforms, the IOMMU is configured and controlled via two sets of registers: one in the PCI configuration space and another mapped into the system address space. Since the IOMMU appears to the OS as a PCI function, it has a capability block in the PCI configuration space. Additionally, up to eight data structures are placed in main system memory. They describe how interrupts and memory are mapped for peripheral devices, and they also store each device's memory access permissions.
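The central in-memory structure of the AMD IOMMU is the device table, indexed by a 16-bit DeviceID built from the PCI bus (8 bits), device (5 bits), and function (3 bits) numbers. The Python sketch below shows that indexing and how a per-device entry gives each device its own view of memory. `DeviceTableEntry` and `translate` are hypothetical and heavily simplified: a real entry holds a page table root pointer, permission bits, interrupt remapping information, and more.

```python
from dataclasses import dataclass, field


def device_id(bus, device, function):
    """Pack PCI bus/device/function into a 16-bit ID (bus:8, device:5, function:3)."""
    if not (0 <= bus <= 0xFF and 0 <= device <= 0x1F and 0 <= function <= 0x7):
        raise ValueError("expected bus 0-255, device 0-31, function 0-7")
    return (bus << 8) | (device << 3) | function


def parse_bdf(text):
    """Parse a 'BB:DD.F' address in hex, as printed by lspci (e.g. '01:00.0')."""
    bus_dev, function = text.split(".")
    bus, device = bus_dev.split(":")
    return int(bus, 16), int(device, 16), int(function, 16)


@dataclass
class DeviceTableEntry:
    """Hypothetical, heavily simplified device table entry."""
    domain_id: int
    page_table: dict = field(default_factory=dict)  # I/O page -> physical page


# Device table indexed by DeviceID; a device without an entry gets no DMA access.
device_table = {}


def translate(dev_id, iova_page):
    entry = device_table.get(dev_id)
    if entry is None or iova_page not in entry.page_table:
        raise PermissionError(f"DMA blocked: device {dev_id:#06x}, page {iova_page:#x}")
    return entry.page_table[iova_page]


nic = device_id(*parse_bdf("01:00.0"))
gpu = device_id(*parse_bdf("02:00.0"))
device_table[nic] = DeviceTableEntry(domain_id=1, page_table={0x10: 0xA000})
device_table[gpu] = DeviceTableEntry(domain_id=2, page_table={0x10: 0xB000})

# The same I/O virtual page resolves to different physical pages per device.
print(hex(translate(nic, 0x10)), hex(translate(gpu, 0x10)))  # 0xa000 0xb000
```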

Enabling

IOMMU is a generic name for technologies such as VT-d by Intel, AMD-Vi by AMD, TCE by IBM and SMMU by ARM. Make sure that your CPU supports one of these before you try to enable IOMMU.

UEFI/BIOS

First of all, the IOMMU has to be initialized by the UEFI/BIOS, and information about it has to be passed to the kernel in ACPI tables. For the end user, this means entering the UEFI/BIOS settings and setting the IOMMU option to enabled.

For readers interested in developing firmware capable of IOMMU initialization, the next post on this topic will describe the process of enabling the IOMMU for PC Engines apu2 in coreboot.
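Whether the firmware actually described an IOMMU can be checked from a running Linux system, which exposes the ACPI tables it received under `/sys/firmware/acpi/tables/`. The relevant tables are DMAR (Intel VT-d), IVRS (AMD-Vi), and IORT (Arm, which describes SMMUs among other I/O topology). The following minimal Python sketch only looks at table names; the function names are hypothetical, and the presence of a table is a strong hint rather than proof that the IOMMU is usable.

```python
import os

# ACPI tables through which firmware describes IOMMUs to the OS
IOMMU_ACPI_TABLES = {
    "DMAR": "Intel VT-d",
    "IVRS": "AMD-Vi",
    "IORT": "Arm SMMU (IORT also describes other I/O topology)",
}


def iommu_tables_in(table_names):
    """Select the IOMMU-related entries from a list of ACPI table names."""
    present = set(table_names)
    return {name: tech for name, tech in IOMMU_ACPI_TABLES.items() if name in present}


def find_iommu_acpi_tables(tables_dir="/sys/firmware/acpi/tables"):
    """Look for IOMMU ACPI tables in the directory where Linux exposes them."""
    try:
        return iommu_tables_in(os.listdir(tables_dir))
    except OSError:  # no ACPI tables exposed, or no access
        return {}


if __name__ == "__main__":
    found = find_iommu_acpi_tables()
    if found:
        for name, tech in found.items():
            print(f"{name}: {tech}")
    else:
        print("No IOMMU ACPI table found; check the UEFI/BIOS setting.")
```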

Linux kernel

Enable IOMMU support by setting the correct kernel parameter depending on the type of CPU in use:

- For Intel CPUs (VT-d), set `intel_iommu=on`.

- For AMD CPUs (AMD-Vi), set `amd_iommu=on`.

- Additionally, if you are interested in PCIe passthrough, set `iommu=pt`.
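How the parameters are added depends on the bootloader (on GRUB-based distributions they usually go into `GRUB_CMDLINE_LINUX_DEFAULT` in `/etc/default/grub`). After a reboot, `/proc/cmdline` shows what the kernel was actually booted with. The Python sketch below extracts the IOMMU-related parameters from such a line; the function names are hypothetical, and the whitespace split is a simplification that ignores the kernel's quoting rules.

```python
def iommu_params(cmdline):
    """Return IOMMU-related kernel parameters from a command line string."""
    wanted = ("intel_iommu", "amd_iommu", "iommu")
    found = {}
    # Simplification: split on whitespace and ignore the kernel's quoting rules.
    for token in cmdline.split():
        key, _, value = token.partition("=")
        if key in wanted:
            found[key] = value  # if a key repeats, the last occurrence wins here
    return found


def current_iommu_params(path="/proc/cmdline"):
    """Read the running kernel's command line (Linux only)."""
    with open(path) as f:
        return iommu_params(f.read())


example = "BOOT_IMAGE=/vmlinuz-linux root=/dev/sda2 rw intel_iommu=on iommu=pt"
print(iommu_params(example))  # {'intel_iommu': 'on', 'iommu': 'pt'}

if __name__ == "__main__":
    try:
        print(current_iommu_params())
    except OSError:
        print("/proc/cmdline is not available on this system")
```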

After rebooting, you can check the dmesg output to confirm that the IOMMU is enabled. On Intel platforms, the relevant kernel messages contain DMAR; on AMD platforms, they contain AMD-Vi.
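The kernel log check can also be scripted. This minimal Python sketch keeps only log lines mentioning DMAR, AMD-Vi, or IOMMU (case-insensitive); the pattern and function names are assumptions for illustration. Reading the log may require root when the `kernel.dmesg_restrict` sysctl is set.

```python
import re
import subprocess

# Markers in Linux IOMMU driver messages: "DMAR" (Intel VT-d),
# "AMD-Vi" (AMD), plus the generic word "IOMMU" (case-insensitive).
IOMMU_LOG_PATTERN = re.compile(r"DMAR|AMD-Vi|IOMMU", re.IGNORECASE)


def iommu_log_lines(log_text):
    """Keep only kernel log lines that mention the IOMMU."""
    return [line for line in log_text.splitlines() if IOMMU_LOG_PATTERN.search(line)]


def read_kernel_log():
    """Run dmesg; returns '' if it is missing or its output is restricted."""
    try:
        result = subprocess.run(["dmesg"], capture_output=True, text=True, check=False)
    except OSError:
        return ""
    return result.stdout


if __name__ == "__main__":
    lines = iommu_log_lines(read_kernel_log())
    print("\n".join(lines) if lines else "No IOMMU messages found (try running as root).")
```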

PCIe Passthrough

One of the most interesting use cases of the IOMMU is PCIe passthrough. With the help of the IOMMU, all DMA accesses and interrupts of a device can be remapped into the address space of a guest virtual machine. By doing so, the host gives complete control of the device to the guest OS. This means the host doesn't have to virtualize the device or translate communication between it and the guest, which almost completely removes the performance overhead and latency caused by such translations.

For example, by passing through a GPU, it is possible to play games on a Windows guest with performance barely distinguishable from bare-metal Windows. Another frequent use case is passing through a network interface card to use its full performance in the guest OS.

The obvious drawback is that a passed-through device cannot be used by the host or by other guests. A partial solution to this problem is a technology called SR-IOV (Single Root I/O Virtualization). It allows different virtual machines to share a single PCI Express hardware interface, though very few devices support SR-IOV; it is almost exclusively available in server-grade devices.

It is worth noting that the IOMMU is not always capable of isolating a single device. Depending on the PCIe tree configuration, some devices are inseparable. In that case, the kernel places those devices in a single IOMMU group. These groups will be the topic of a future post.
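Before picking a device for passthrough, it helps to know which IOMMU group it belongs to, since devices sharing a group generally have to be assigned together. Linux lists the groups under `/sys/kernel/iommu_groups/`, with one numbered directory per group and a `devices/` subdirectory containing the members' PCI addresses. The Python sketch below reads that layout; the function names are hypothetical.

```python
import os


def iommu_groups(root="/sys/kernel/iommu_groups"):
    """Map each IOMMU group number to the sorted PCI addresses of its devices."""
    groups = {}
    try:
        names = os.listdir(root)
    except OSError:
        return groups  # IOMMU not enabled, or not a Linux system
    for name in names:
        if not name.isdigit():
            continue
        try:
            groups[int(name)] = sorted(os.listdir(os.path.join(root, name, "devices")))
        except OSError:
            continue
    return dict(sorted(groups.items()))


def group_mates(groups, address):
    """Other devices in the same IOMMU group as `address` (e.g. '0000:01:00.0')."""
    for members in groups.values():
        if address in members:
            return [m for m in members if m != address]
    return []


if __name__ == "__main__":
    for number, members in iommu_groups().items():
        print(f"IOMMU group {number}: {' '.join(members)}")
```

An empty result from `group_mates` means the device sits alone in its group and can be isolated on its own.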

Further reading

https://www.amd.com/system/files/TechDocs/48882_3.07_PUB.pdf

https://www.kernel.org/doc/html/latest/driver-api/vfio.html

https://wiki.archlinux.org/index.php/PCI_passthrough_via_OVMF

Summary

The IOMMU is a useful device with many advantages. Among other things, it protects a system from DMA attacks and allows better isolation of virtual machines. Its main disadvantage is the added latency of DMA transfers, caused by the need to check the mappings and permissions stored in main memory when a transfer occurs. This latency can be largely alleviated by caching that information inside the IOMMU. In summary, using an IOMMU seems to be beneficial in almost every case.

If you think we can help improve the security of your firmware, or you are looking for someone who can boost your product by leveraging advanced features of the hardware platform you use, feel free to book a call with us or drop us an email at contact<at>3mdeb<dot>com. And if you want to stay up to date on all things firmware security and optimization, be sure to sign up for our newsletter.